Inquiry into the use of generative artificial intelligence in the Australian education system

In January 2024 I had the opportunity to provide evidence as a witness to the House Standing Committee on Employment, Education and Training’s inquiry into the use of generative artificial intelligence in the Australian education system.

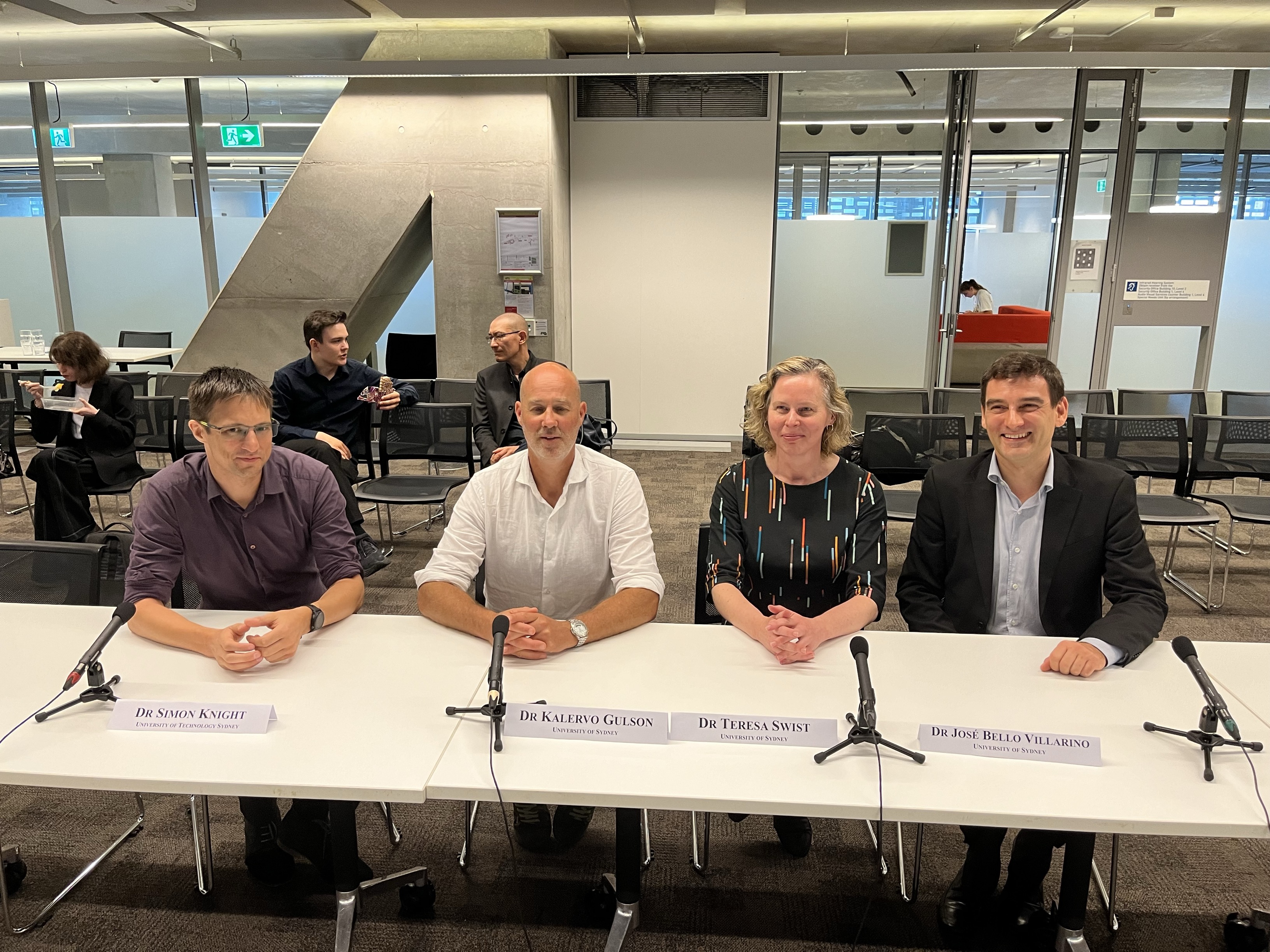

I presented with colleagues from the Sydney Education Futures Studio and Policy Lab, who spoke to their inquiry submission (to which I contributed). I primarily spoke in my capacity as Director of the UTS Centre for Research in a Digital Society (CREDS), and spoke to the CREDS inquiry submission. In my substantive position as an associate professor in the UTS Transdisciplinary School, I also contributed to the UTS inquiry submission.

With CREDS colleagues we also did some analysis of all submissions to the inquiry, published in AJET:

Knight, S., Dickson-Deane, C., Heggart, K., Kitto, K., Çetindamar Kozanoğlu, D., Maher, D., Narayan, B., & Zarrabi, F. (2023). Generative AI in the Australian education system: An open data set of stakeholder recommendations and emerging analysis from a public inquiry. Australasian Journal of Educational Technology, 39(5), 101–124. https://doi.org/10.14742/ajet.8922

Opening remarks

My opening remarks are below:

Thank you for the invitation today. I’d like to acknowledge the Gadigal people of the Eora nation and Elders past and present.

I’ll open by giving three ways to frame genAI in learning, with distinctive implications:

- First, education is one area where genAI will have impact, including on how we teach and learn. The practices and tools marking this shift will develop alongside each other. To support that development, we need policy and methods for generating evidence about those tools and practices, and avenues to share this knowledge.

- Second, what we teach will of course also shift, reflecting changes in society and labour markets. This is a cross-sector and cross-discipline challenge, and understanding how to support professional learning in this context will need coordination and dynamism.

- Third, and I want to emphasise this one: to understand ethical engagement with AI, we need to understand how people learn about AI and its applications, because this underpins meaningful stakeholder participation, how real ‘informed consent’ is, and whether ‘explainable AI’ actually achieves its end, i.e., is understandable AI. These are crucial for AI that fosters human autonomy. For this, we need sector-based guidelines with examples and ways to share practical cases.

Navigating the genAI in education discourse over the last 18 months, we’re faced with two contradictory concerns: concern regarding the unknown (we don’t know enough, ‘unprecedented’ change, etc.); and concern regarding the known (sure statements that AI can do x, or will lead to y). Strong claims in either space should be tempered; we have examples of previous technologies, and we have existing regulatory models that apply now just as they did a year ago. We can learn from prior tech-hype and failures and use existing policy in many cases to tackle these novel challenges. On the other side, though, we don’t ‘know’ the efficacy of tools or their impact in many contexts, for example what the implications are of being able to offload ‘lower level’ skills that may be required for more advanced operations. These are open questions for research on learning, and this lack of evidence matters if we want people to make judgements about whether engaging is ‘worthwhile’, i.e., whether genAI will help us achieve our aims in education.