Me

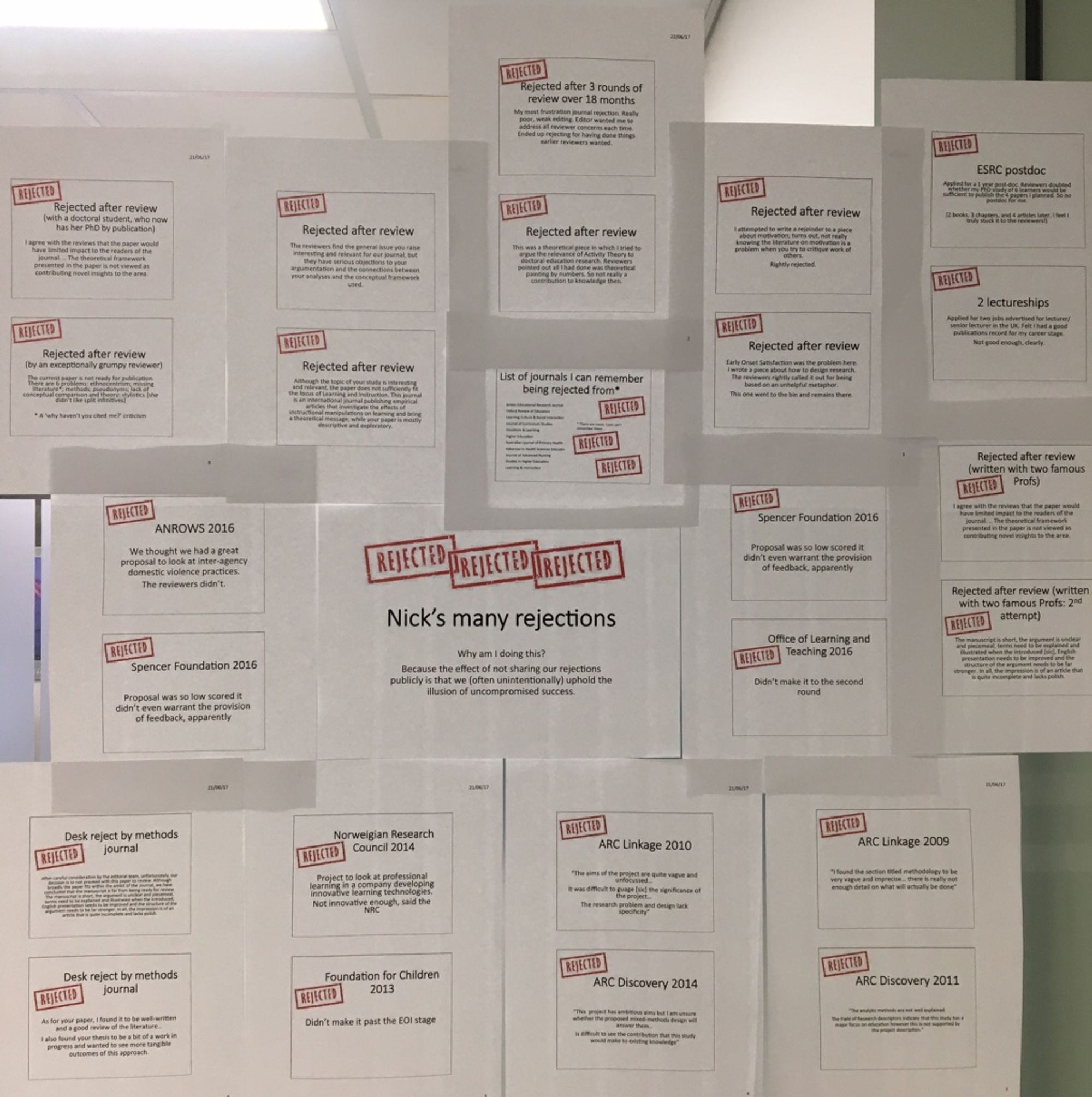

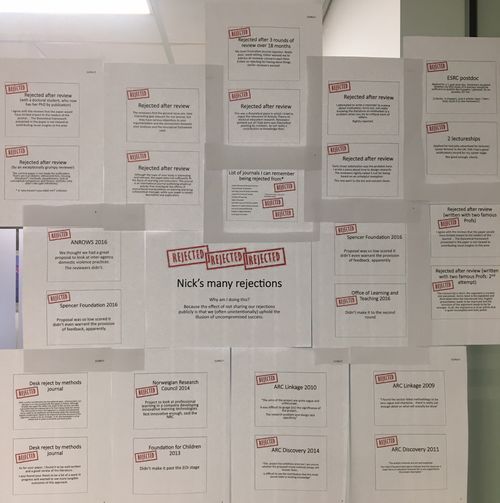

I ‘succeed’, and am in a privileged position in many respects, but the terrain is still rocky. It’s important to share because: (1) it helps us understand (and hopefully improve!) the process; (2) it gives a sense of proportion to setbacks; and (3) it offers strategies for tackling setbacks and learning from them.

I know some stuff…

I was co-editor-in-chief of a journal for 6 years, including while it went through the indexing process.

I review for conferences (about 4? a year), journals (probably 6-10 a year since my editorship), and grants, and occasionally book proposals.

I write quite a lot, and with a wide network. On Google Scholar I have ~3000 citations, an h-index of 27, and >100 outputs across >70 venues.

But… in the last 6 months:

5 desk rejections (PNAS→NatMI→PLOS; BDS→AIOS; EduRev→EduRev; ASIST→ILS)

2 rejections after review (1 in the 3rd round→palcom, 1 in the 2nd→??)

1 grant rejection (2nd round)

The desk rejections were due to:

Scope of topic (PNAS)

Method (review, ASIST)

Something else (BDS, EduRev)

And the papers…

Sharing challenges…

What does the process look like?

Some useful links…

Think about the key things you want to be known for, and then any sub-themes. Be pragmatic about getting work out. For a thesis, remember that in the UK and Australian models, publications can serve a ‘dual purpose’ (this isn’t true in some systems), i.e., your thesis can include material that has been previously published. BUT there may be copyright issues: http://sjgknight.com/finding-knowledge/2015-12-23-writing-your-thesis-and-using-your-publications

PhD students: consider creating a publication plan from your thesis: https://patthomson.net/2012/09/03/writing-from-the-phd-thesis-letting-go/

Activity 1: Using the Miro board (log in, or use the password peerreview), add:

1. Nightmares - bad experiences you’ve had with peer review

2. Dreams - great experiences you’ve had with peer review

3. Sage - sage advice you’d give for navigating peer review (and/or links)

Nightmares, Dreams, and Advice (based on Activity 1 above)

COPE cases about Peer Review

Shit My Reviewers Say: Examples of bad peer review

Some generic examples of poor review comments

Cite x, y, z (common author, obviously the reviewer)

Cite x (x hasn’t come out yet, it’s obviously by the reviewer)

You’ve misunderstood x (you authored x)

The authors should have done x (x is a totally different, possibly appropriate, approach that has no bearing on the merits of the approach actually taken)

The researchers are [some personal flaw] (e.g., on my very first submission a reviewer said it “reads like it was written by an undergrad/masters student” (I was one); either the reviewer thinks this is true, in which case they should be supportive, or they are just being insulting).

I am very cautious about inferring someone’s experience with the English language. For example, “the authors are probably not native speakers” is sometimes not maliciously intended, but could be, and is not always correct (see e.g., https://patthomson.net/2020/10/12/style-tone-and-grammar-native-speaker-bias-in-peer-reviews/). It’s fine to point out issues with the language, but don’t make inferences about competence.

“Throughout the paper they x”, without any examples of x

Lessons

focus on the work, not on the researcher or on the work you would have done

you can suggest citing your own work, but be very cautious and consider suggesting other authors’ work too (and flag to the editor in the confidential comments that you’ve done this)

authors cannot expect reviewers to do their job for them, but they can expect specific examples of issues, and sometimes it’s appropriate for reviewers to suggest remedies

Responding to reviews: The good, the bad, and the ugly

It’s emotional. If your first reaction is “did they even read the paper?”, that’s probably OK; but while reviewers do make mistakes, so do authors, so engage generously.

Make a table. I typically cluster similar comments and organise them so that minor points are at the bottom. Number each comment and indicate which reviewer made it. Track changes in your document; it is irritating for editors and reviewers not to know what was done, or to have to check whether any reviewer comments have been missed.

In revising, consider what the reviewers are saying and what that tells you about how they read the manuscript; they may have misunderstood, but that may still call for edits to clarify. Sometimes reviewers are wrong, and it’s OK to explain why a comment has not been actioned.

include an overarching letter for any really big issues (or where you have not actioned a significant comment)

you are writing to the editor, but depending on the decision, your response may also go to the reviewers.

Some material on responding to reviews

Mavrogenis, A. F., Quaile, A., & Scarlat, M. M. (2020). The good, the bad and the rude peer-review. International Orthopaedics (SICOT), 44, 413–415. https://doi.org/10.1007/s00264-020-04504-1

Noble, W. S. (2017). Ten simple rules for writing a response to reviewers. PLoS Computational Biology, 13(10), e1005730. https://doi.org/10.1371/journal.pcbi.1005730

Nature Index. (2021, May 18). How to respond to difficult or negative peer-reviewer feedback. https://www.nature.com/nature-index/news/how-to-respond-difficult-negative-peer-reviewer-feedback

Sample docs

Cover letter

Response to reviews letter

Examples of responses to reviews

There are some samples here:

this seems to be a case where a reviewer is particularly critical of how their own work is cited in the new paper: https://royalsocietypublishing.org/action/downloadSupplement?doi=10.1098/rsos.181868&file=rsos181868_review_history.pdf (appendices a, c, and d are all response files).

a short discussion of a specific case (a misunderstanding about the method): https://academia.stackexchange.com/questions/28054/responding-to-a-reviewer-who-misunderstood-key-concepts-of-a-paper

Some examples of responses to generic reviewer feedback https://apastyle.apa.org/style-grammar-guidelines/research-publication/sample-response-reviewers.pdf

“R1 indicates that they interpret our method of evaluating current designs as a weak approach to user-centred design (point #3). However, we do not make a claim to be conducting user analysis of these tools. While users of course matter to the design and evaluation of technologies (and understanding transparency), our analysis is not in this tradition. R1 also suggests that we over-emphasise the importance of these technologies; however, their significance is well established in the literature (e.g. REFERENCES).”

“R1 mistakenly equates our method of evaluating current designs according to ethical principles with a weak attempt at user-centered design (point #3). But we never claimed to be doing a user analysis of the tools. R1 complains that we over-emphasise the importance of these technologies, when their influence is already well established in the literature (e.g. REFERENCES).”

"There seems to be a misunderstanding about the methodology, which we hope to clear through our responses below and by revising the text in the manuscript. Contrary to what the reviewer states, ...” (from academia.stackexchange, cc-by-sa license)

"This comment completely misrepresent the methodology described in the paper." (from academia.stackexchange, cc-by-sa license)

Reviewer one frames the work thus: “They argue that no study has to date examined the ways that x-related projects engage with the y, or its adequacy for this context.” Their comments rest on the concern that the paper does not adequately situate the background (comment n1) and then the findings (comment n2) in relation to existing research on “topic related to x”. However, we believe this is a misreading of the intent of the paper. While the wider scope of ‘x’ topics is certainly relevant to aspects of this work, our claim is not that “no study has to date examined the ways that x-related projects engage with the y, or its adequacy for this context.” Instead, our claim (warranted by three recent studies) is that there are gaps in the narrower, related x2 space, and a need to better understand this space. That is, while there is certainly interesting and valuable work regarding the wider x-system, including the suggested work of [one author who appeared in 3 of 4 items suggested by the reviewer] and colleagues, as we set out below, in that context and given the constraints of space, we do not believe the suggestions are within scope for the paper. If this view is not what was intended, we would of course be happy to revisit our position given further clarification.

???

“We disagree with the reviewer’s comment about our methodology. Our methods are standard in the field and have been used successfully in previous studies. No changes to the methodology are necessary.” (ChatGPT-prompted)

“We appreciate the reviewer’s feedback on the methodology. In response, we have decided to completely overhaul our analysis and use a more advanced statistical approach. As suggested, we have replaced our regression analysis with a machine learning approach.” (ChatGPT-prompted)

“We thank the reviewer for their comment on our methodology. While our methods are indeed traditional, they are well-established in the field for analyzing the type of data we collected, and as [xy, 2017] demonstrate, they provide robust results against comparators. To illustrate this point, we ran a preliminary analysis for comparison (see below), which demonstrates the validity of our results.” (ChatGPT-prompted)